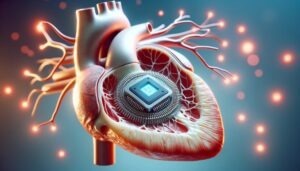

Can ChatGPT Transform Cardiac Surgery and Heart Transplantation?

This article explores the role of artificial intelligence, specifically ChatGPT and the generative pre-trained transformer (GPT) models on which it is built, in cardiac surgery and heart transplantation. It discusses how AI could enhance clinical care, decision-making, surgical training, research, and education. However, it also cautions about the risks, including inadequate validation, ethical challenges, and medicolegal concerns. ChatGPT is presented as a tool to support surgeons, not replace them. The article emphasizes the importance of human oversight and of the surgeon's nuanced understanding of each patient's circumstances.